In the previous installment of the blog, we discussed our industry’s need to validate test methods in order to demonstrate that our measurement methods are capable of identifying the variances inherent to production methods. Demonstrating suitability must include attempting to quantify or classify the variances that are inherent to the measurement methods themselves. The real trick to proving their suitability for use is proving that we understand the upper limits of the potential measurement error, because the upper limit of the method uncertainty has to be added to the upper limit of the production uncertainty in order to defend the ability of the measurement system to reflect our manufacturing process in a way that is true and meaningful.

How we go about reaching this understanding is a matter of some debate in the medical device industry.

The FDA guidance on Test Method Validation is a wonderful tool for organizations performing chemical and biological laboratory analyses, concerned with quantifying and/or qualifying the components of drug product, but its value in that area is directly related to its specificity to those concerns. The scope of the guidance does not address systems designed to measure physical dimensions with solid state tools whose performance can drift with even nominal wear and tear.

In the absence of an agency voice, the industry adopted a tool developed decades ago by the automotive industry and began using the terms “Test Method Validation” and “Gauge R&R” interchangeably. Seemingly overnight, method validation programs began to consist almost solely of Gauge R&R studies. However, the number of method validation observations continued to rise, and industry representatives tasked with defending Gauge R&R-only TMV programs to agency inspectors continued to struggle.

The industry came to the realization that this type of limited program was not capable of meeting the agency’s expectations with regard to validation programs.

The difficulty in providing a convincing defense for the use of the Gauge R&R is not due to a lack of experience in the field; this is not a new or innovative tool. Gauge R&R methods have been on the engineering scene for decades, and the method’s structure, objectives, and faults are well understood.

Maybe that’s the problem.

We all know what type of study this is, and what it isn’t.

| Gauge R&R | Purpose |
| --- | --- |
| IS | developed to use probabilities to predict the potential for variability, to indicate a measurement system’s level of uncertainty (highlighting effects that are not well understood and variables that are not in control). |
| ISN’T | developed to confirm well understood limits of precision, or to demonstrate the stability, consistency or robustness of a method over time (demonstrating that all critical effects are understood and that there are no variables that are outside of our control). |

Simply put, this is not so much a method designed to demonstrate confidence in a method as it is a tool designed to demonstrate a lack of confidence or a particular area of uncertainty. Taken in that context, the Gauge R&R was intended to be a starting point, providing an indication of where our methods fall out of control, so that we can intervene and change something in order to improve control.

The difference may seem subtle, but when it comes to statistical expressions of data, there is no such thing as a meaningless difference.

Attempting to use contemporary Gauge R&R methodology for any purpose other than this results in a myriad of statistical misrepresentations of the data collected. This blog is not intended to provide a tutorial in multivariate linear regression, but for those who are interested, follow this link to an effective and concise walk-through of the computational logic behind the R&R methodology.

In the meantime, the following is a summary of the well-known faults in attempts to use a Gauge R&R study in this way:

- R&R study computations are based upon a random effects model, meaning that the calculations involved are based on the assumption that the effects observed are not correlated to the independent variables – but then the R&R attempts to use those calculations to quantify the correlation between the effects and the variable.

- Repeatability and Reproducibility are not additive. Because reproducibility cannot occur except in conjunction with a repeatability error, it is misleading to consider them separately from each other.

- The R&R attempts to calculate and then compare measurement error, tolerances, and total variation as ratios (meaning that, taken together, they should equal 100% but do not).

- The R&R expresses Repeatability and Reproducibility as percentages of specified tolerance and total variation, which is misleading, since measurement error does not “consume” specified tolerance or total variation (meaning that the total variation does not decrease to show a remaining balance when any variance is encountered).

- Gauge R&R studies do not address accuracy or stability in any way
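
The ratio problem described in the list above can be seen with a few lines of arithmetic. The following is a minimal sketch, assuming hypothetical variance components and a hypothetical tolerance width (none of these numbers come from a real study). It shows that percentages computed on a standard-deviation basis, as R&R reports commonly do, need not sum to 100%, whereas the same components expressed as variances do.

```python
import math

# Hypothetical variance components (measurement units squared), for illustration only.
var_repeatability = 0.04    # within-operator (equipment) variation
var_reproducibility = 0.01  # between-operator (appraiser) variation
var_part = 0.20             # part-to-part (production) variation
tolerance_width = 3.0       # hypothetical USL - LSL, in measurement units

# The standard R&R calculation sums variances, not standard deviations.
var_grr = var_repeatability + var_reproducibility
var_total = var_grr + var_part


def pct_of_total_sd(var):
    """Component standard deviation as a percentage of the total standard deviation."""
    return 100.0 * math.sqrt(var) / math.sqrt(var_total)


def pct_of_total_var(var):
    """Component variance as a percentage of the total variance."""
    return 100.0 * var / var_total


# Sum of the "percent of total variation" figures on each basis.
sd_pct_sum = pct_of_total_sd(var_grr) + pct_of_total_sd(var_part)
var_pct_sum = pct_of_total_var(var_grr) + pct_of_total_var(var_part)

# Precision-to-tolerance (P/T) ratio: six measurement standard deviations
# expressed as a percentage of the tolerance width.
p_to_t = 100.0 * 6.0 * math.sqrt(var_grr) / tolerance_width

print(f"%GRR, SD basis:        {pct_of_total_sd(var_grr):.1f}%")
print(f"%Part, SD basis:       {pct_of_total_sd(var_part):.1f}%")
print(f"Sum on SD basis:       {sd_pct_sum:.1f}%")
print(f"Sum on variance basis: {var_pct_sum:.1f}%")
print(f"P/T ratio:             {p_to_t:.1f}%")
```

Only the variance-based percentages behave like shares of a whole, which is one reason the standard-deviation ratios are easy to misread.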

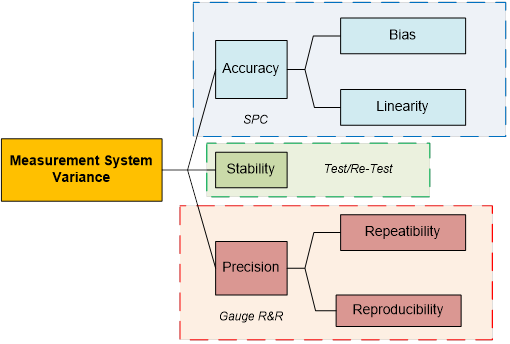

Let’s review the basic characteristics of any measurement system, which must all be addressed by a comprehensive test method validation program:

Accuracy (Average Measurement Value vs. Actual Value):

- Bias is a measure of the one sided distance between the average value of the measurements and the “True” or “Actual” value of the sample or part

- Linearity is a measure of the consistency of Bias over the range of the measurement device

Precision (Variation Between Repeated Measurements):

- Repeatability assesses whether the same operator can measure the same part/sample multiple times with the same measurement device and get the same value.

- Reproducibility assesses whether different operators can measure the same part/sample with the same measurement device and get the same value.
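
Each of the four characteristics above can be estimated from simple study data. The sketch below assumes hypothetical reference standards and readings, and it deliberately uses simplified estimators (a pooled within-operator standard deviation for repeatability, and the spread of operator means for reproducibility) rather than the full ANOVA calculation.

```python
from statistics import mean, stdev, variance

# Hypothetical data: three reference standards with known values,
# each measured three times with the same gauge.
reference_readings = {
    2.0: [2.1, 2.0, 2.2],
    4.0: [4.2, 4.1, 4.3],
    6.0: [6.3, 6.2, 6.4],
}

# Bias: average measured value minus the reference ("true") value.
bias = {ref: mean(vals) - ref for ref, vals in reference_readings.items()}

# Linearity: least-squares slope of bias against reference value.
# A slope near zero means the bias is consistent across the range.
refs = list(bias)
ref_mean = mean(refs)
bias_mean = mean(bias.values())
linearity_slope = (
    sum((r - ref_mean) * (bias[r] - bias_mean) for r in refs)
    / sum((r - ref_mean) ** 2 for r in refs)
)

# Hypothetical data: one part measured three times by each of two operators.
operator_readings = {
    "A": [5.01, 5.02, 5.00],
    "B": [5.05, 5.06, 5.04],
}

# Repeatability (simplified): pooled within-operator standard deviation.
# With equal repeats per operator, pooling is the mean of the variances.
repeatability_sd = mean(variance(v) for v in operator_readings.values()) ** 0.5

# Reproducibility (simplified): standard deviation of the operator means.
reproducibility_sd = stdev(mean(v) for v in operator_readings.values())

for ref, b in bias.items():
    print(f"Bias at reference {ref}: {b:+.3f}")
print(f"Linearity slope:    {linearity_slope:.3f}")
print(f"Repeatability SD:   {repeatability_sd:.4f}")
print(f"Reproducibility SD: {reproducibility_sd:.4f}")
```

In this made-up data the bias grows with the reference value, so the gauge would show a linearity problem even though its repeatability looks tight.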

As we can begin to see:

- a Gauge R&R alone is not an adequate analysis of the variance that may be inherent in a measurement system

- the computational logic and focus of a Gauge R&R has not been developed to demonstrate that our system is stable and capable of routinely meeting performance specifications
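
One common way to examine the stability that a Gauge R&R does not address is to measure the same reference standard at intervals and track the results on an individuals control chart. The following sketch assumes hypothetical readings and uses the conventional moving-range constant of 2.66 to set the limits; it illustrates the idea and is not a prescribed method.

```python
from statistics import mean

# Hypothetical baseline: the same reference standard measured at regular intervals.
baseline = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.00]

# Moving ranges: absolute differences between consecutive readings.
moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]

center = mean(baseline)
mr_bar = mean(moving_ranges)

# Individuals chart limits: center line +/- 2.66 times the average moving range.
ucl = center + 2.66 * mr_bar
lcl = center - 2.66 * mr_bar

# Hypothetical later readings of the same standard, checked against the limits.
later = [10.01, 9.99, 10.12]
out_of_control = [x for x in later if not lcl <= x <= ucl]

print(f"Center line: {center:.3f}")
print(f"Control limits: {lcl:.3f} to {ucl:.3f}")
print(f"Readings outside the limits: {out_of_control}")
```

A reading outside the limits suggests the measurement process has shifted and should be investigated before its data are trusted.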

With those points in mind, let’s ask ourselves: why should we treat developing, validating, and controlling a measurement system differently than we would a process? The short answer is, we shouldn’t. We should build our Test Method Validation components and programs the same way we build our process validation programs.

Process Validation programs recognize that before equipment is qualified or processes can be validated, a data-driven understanding of the process parameters, the potential control points, and their effect on the critical quality attributes of the process must be developed. Only once these understandings are well documented, expressed in understandable process specifications, and controlled via appropriate equipment operating ranges and procedures can we make risk-based decisions on the most appropriate experimental designs and sampling plans for each validation project, based on everything learned during the iterative development stage.

Now if we take the paragraph above and adapt it to address a process that measures, what should we have?

When you consider the model above and contrast it with a TMV program that is limited in scope to a Gauge R&R spreadsheet, it becomes easier to see why those programs leave Quality Management in the proverbial and unenviable position of defending why we are trying to fit round pegs into square holes.

If this paradigm exists in your workplace, and you find yourself in a position to challenge it, the first step could be as simple as changing language. Let’s try to be as precise with our language as we are with our gauges.

- Try referring to a Measurement System as a Measurement Process.

Then, try these:

- Review all of the FDA guidance that has been offered for Process Validation

- Review the design/development work that is the output of your local design control procedures, including Gauge R&R studies

- Review your local procedures on the lifecycle involved in validation

- Align as much of the language as possible: articulate your measurement process in terms of critical process parameters (CPPs, e.g., equipment tolerance and range) and critical quality attributes (CQAs, e.g., observed variance, P/T)

- Try creating a table similar to the one above, and place the documents that exist within the table

- Note which cells of the table are empty and focus your efforts there

- Use the exercise to evaluate your current programs, and adopt procedures and tools that will ensure your future projects don’t result in tables that have blank cells

© Coda Corp USA 2014. All rights reserved.

Authored By:

Gina Guido-Redden

Chief Operating Officer

Coda Corp USA

“Quality is never an accident; it is the result of high intention, sincere effort, intelligent direction and skillful execution. It represents the wisest of many alternatives.”
